Australian Regulator Reveals AI-Generated Terrorism Content Complaints: Tech Safeguards Under Scrutiny

“A major tech company reported over 250 global complaints about AI-generated deepfake terrorism content, sparking regulatory scrutiny.”

As artificial intelligence (AI) continues to reshape the digital landscape, a recent disclosure by Australian authorities about AI-generated terrorism content has drawn close attention from the tech industry and regulators alike. It highlights the urgent need for robust AI content moderation and deepfake detection methods, as well as the challenges involved in regulating AI-generated content.

As we delve into this complex issue, we’ll explore the implications for tech regulation in Australia, the evolving landscape of online safety, and the global efforts to prevent AI exploitation. Join us as we unpack the latest developments and their potential impact on the future of AI safeguards and digital security.

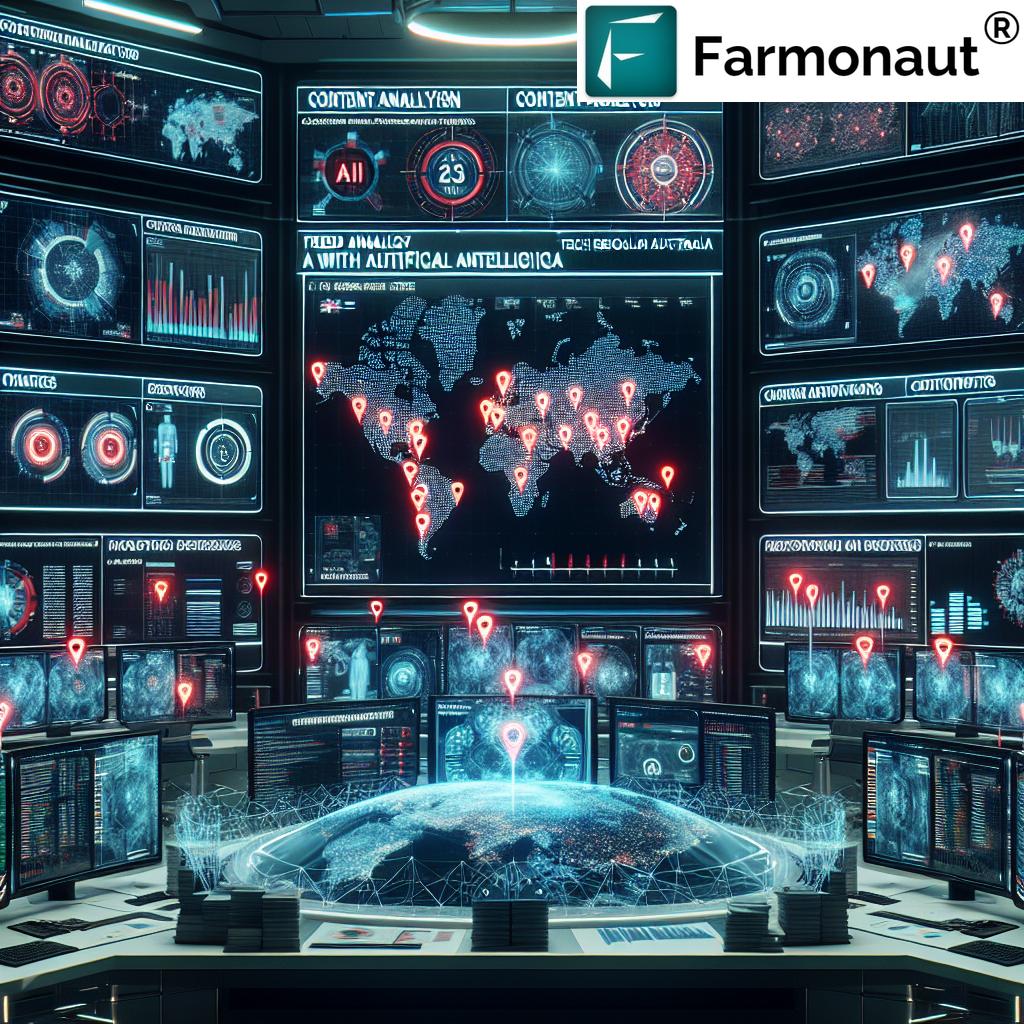

The Alarming Rise of AI-Generated Terrorism Content

The Australian eSafety Commission has brought to light a disturbing trend in the misuse of AI technology. According to its report, Google disclosed receiving more than 250 complaints globally between April 2023 and February 2024, a period of nearly a year. The complaints alleged that Google’s AI software had been used to create deepfake terrorism material.

This revelation marks a significant milestone in our understanding of the potential misuse of AI technologies. It’s the first time we’ve gained insight into the scale of this problem on a global level, underscoring the critical need for enhanced safeguards and regulatory measures.

The Scope of the Problem

The complaints weren’t limited to terrorism-related content. Google also reported receiving dozens of user reports warning that its AI program, Gemini, was being used to create child abuse material. This shows that the same generative tools can be misused to produce several kinds of illegal and harmful content.

Let’s break down the numbers:

- 258 user reports about suspected AI-generated deepfake terrorist or violent extremist content

- 86 user reports alleging AI-generated child exploitation or abuse material

These figures, while concerning, likely represent only a fraction of the actual instances of AI misuse. Many cases may go unreported or undetected, suggesting that the true scale of the problem could be much larger.

The Response from Tech Companies

In response to these challenges, tech companies like Google are implementing various measures to combat the misuse of their AI technologies. For instance, Google uses hash-matching to identify and remove child abuse material created with Gemini. This system automatically compares digital fingerprints (hashes) of newly uploaded images against the fingerprints of known images.
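Google has not published how its hash-matching works. The following is only a minimal Python sketch of the general idea, built on a few assumptions:

- It uses a simple perceptual "average hash" over an image that has already been converted to grayscale and shrunk to 8x8 pixels.
- Production systems (Microsoft's PhotoDNA, for example) use different and more robust algorithms.
- The function names and the 5-bit match threshold are hypothetical.

```python
from typing import Iterable, Sequence


def average_hash(pixels: Sequence[Sequence[int]]) -> int:
    """Compute a 64-bit perceptual hash from an 8x8 grid of grayscale values (0-255).

    Assumes the image was already converted to grayscale and downscaled to 8x8.
    """
    flat = [p for row in pixels for p in row]
    if len(flat) != 64:
        raise ValueError("expected an 8x8 grid of pixels")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        # One bit per pixel: 1 if the pixel is brighter than the image's mean.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(candidate: int, known_hashes: Iterable[int], max_distance: int = 5) -> bool:
    """True if the candidate is within max_distance bits of any known hash (hypothetical threshold)."""
    return any(hamming_distance(candidate, h) <= max_distance for h in known_hashes)


# Usage: a database holding the hash of one known image, and a lightly edited re-upload.
known = [average_hash([[10 * (r + c) for c in range(8)] for r in range(8)])]
brightened = [[10 * (r + c) + 3 for c in range(8)] for r in range(8)]
print(matches_known(average_hash(brightened), known))  # True
```

Similar images produce similar perceptual hashes, so small edits such as brightening still match. An exact cryptographic hash, by contrast, would change completely after any edit.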

However, it’s worth noting that the same system isn’t currently used to weed out terrorist or violent extremist material generated with Gemini. This discrepancy highlights the need for more comprehensive and consistent approaches to content moderation across different types of harmful material.

Regulatory Landscape and Tech Company Reporting Requirements

The Australian government has taken a proactive stance in addressing the challenges posed by AI-generated content. Under Australian law, tech firms are required to supply the eSafety Commission with periodic information about their harm minimization efforts. Failure to comply with these reporting requirements can result in significant fines.

Key aspects of the regulatory landscape include:

- Mandatory reporting periods (e.g., April 2023 to February 2024 in the recent disclosure)

- Detailed information on harm minimization strategies

- Potential fines for non-compliance

These measures are part of a broader push for enhanced online safety regulations in Australia. The goal is to create a more transparent and accountable digital environment, where tech companies are held responsible for the content generated using their platforms and technologies.

Global Implications and Calls for Enhanced Safeguards

The issues highlighted by the Australian eSafety Commission are not unique to Australia. Regulators worldwide have been calling for better guardrails to prevent AI from being used to enable terrorism, fraud, deepfake pornography, and other forms of abuse.

The global nature of these challenges requires a coordinated international response. As AI technology continues to advance rapidly, there’s a growing consensus that current regulatory frameworks may be insufficient to address the potential risks.

“Australian authorities are implementing strict online safety regulations, with significant fines for non-compliant tech firms.”

The Critical Role of AI Content Moderation and Deepfake Detection

As we grapple with the challenges posed by AI-generated content, the importance of robust content moderation and deepfake detection methods cannot be overstated. These technologies serve as the first line of defense against the spread of harmful and illegal content online.

AI Content Moderation: Challenges and Opportunities

AI content moderation involves using machine learning algorithms to automatically identify and filter out potentially harmful or inappropriate content. While this technology has made significant strides in recent years, it still faces several challenges:

- Keeping up with evolving AI-generated content

- Balancing accuracy with processing speed

- Addressing cultural and contextual nuances

- Maintaining transparency and accountability in decision-making processes

Despite these challenges, AI content moderation remains a crucial tool in the fight against online harm. By continually refining these systems and combining them with human oversight, we can create more effective barriers against the spread of AI-generated terrorism content and other forms of harmful material.
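Combining automated scoring with human oversight is often implemented as threshold-based routing. The Python sketch below assumes a classifier (not shown) that outputs a harm score between 0 and 1. The class names and threshold values are hypothetical and would need tuning against real data.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    REMOVE = "remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


@dataclass
class ModerationPolicy:
    # Hypothetical thresholds: very confident predictions are actioned automatically,
    # uncertain ones are sent to a human moderator.
    remove_threshold: float = 0.95
    review_threshold: float = 0.60

    def __post_init__(self) -> None:
        if not 0.0 <= self.review_threshold < self.remove_threshold <= 1.0:
            raise ValueError("need 0 <= review_threshold < remove_threshold <= 1")

    def decide(self, harm_score: float) -> Decision:
        """Route content based on a classifier's harm score in [0, 1]."""
        if not 0.0 <= harm_score <= 1.0:
            raise ValueError("harm_score must be between 0 and 1")
        if harm_score >= self.remove_threshold:
            return Decision.REMOVE
        if harm_score >= self.review_threshold:
            return Decision.HUMAN_REVIEW
        return Decision.ALLOW


policy = ModerationPolicy()
for score in (0.98, 0.70, 0.10):
    print(score, policy.decide(score).value)
# 0.98 remove
# 0.7 human_review
# 0.1 allow
```

Lowering `review_threshold` sends more content to human reviewers. This trades extra reviewer workload for fewer missed items, which is one concrete form of the accuracy-versus-speed balance mentioned above.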

Deepfake Detection: A Race Against Time

Deepfakes, or AI-generated synthetic media, pose a particularly challenging problem for content moderators. As the technology behind deepfakes becomes more sophisticated, so too must our methods for detecting them.

Current deepfake detection methods often rely on:

- Analyzing visual inconsistencies

- Detecting unnatural facial movements

- Identifying audio-visual mismatches

- Leveraging blockchain technology for content verification
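Blockchain-based verification rests on a simple idea: record a cryptographic fingerprint of authentic media when it is published, then check later copies against that record. The sketch below stands in for a blockchain with a hypothetical in-memory, hash-chained ledger. Each entry includes the hash of the previous one, so editing an earlier entry is detectable. It uses only Python's standard `hashlib` and `json` modules and does not describe any particular platform's system.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def entry_digest(body: Dict[str, str]) -> str:
    """Hash a ledger entry's fields in a canonical (sorted-key) JSON encoding."""
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))


@dataclass
class ProvenanceLedger:
    """Hypothetical append-only ledger; each entry links to the previous entry's hash."""
    entries: List[Dict[str, str]] = field(default_factory=list)

    def register(self, content: bytes, publisher: str) -> Dict[str, str]:
        prev = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = {"content_hash": sha256_hex(content), "publisher": publisher, "prev_hash": prev}
        record = dict(body, entry_hash=entry_digest(body))
        self.entries.append(record)
        return record

    def is_registered(self, content: bytes) -> bool:
        """True only if these exact bytes were registered earlier."""
        digest = sha256_hex(content)
        return any(e["content_hash"] == digest for e in self.entries)

    def chain_is_intact(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: e[k] for k in ("content_hash", "publisher", "prev_hash")}
            if e["prev_hash"] != prev or e["entry_hash"] != entry_digest(body):
                return False
            prev = e["entry_hash"]
        return True


ledger = ProvenanceLedger()
ledger.register(b"original clip bytes", "newsroom")
print(ledger.is_registered(b"original clip bytes"))  # True
print(ledger.is_registered(b"altered clip bytes"))   # False
```

An exact SHA-256 hash changes if a single byte changes. This approach therefore confirms that a file is an unmodified, registered original. On its own, it cannot prove that an unregistered file is a deepfake.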

However, as deepfake technology improves, these detection methods must evolve rapidly to keep pace. The development of more advanced AI-powered detection tools, combined with increased public awareness and media literacy, will be crucial in combating the spread of deepfake terrorism content and other malicious uses of this technology.

Preventing AI Exploitation: A Collective Responsibility

As we’ve seen from the recent disclosures in Australia, preventing AI exploitation is not just the responsibility of tech companies or regulators alone. It requires a collective effort from various stakeholders, including:

- Technology developers

- Government agencies

- Civil society organizations

- End-users

Each of these groups has a crucial role to play in ensuring that AI technologies are developed, deployed, and used responsibly.

The Role of Technology Developers

As the creators of AI systems, technology developers bear a significant responsibility in preventing AI exploitation. This includes:

- Implementing robust safeguards and ethical guidelines in AI development

- Conducting thorough testing to identify potential vulnerabilities

- Continuously updating and improving content moderation systems

- Collaborating with researchers and regulators to address emerging challenges

By prioritizing safety and ethical considerations from the outset, developers can help mitigate the risks associated with AI-generated content.

Government Agencies and Regulatory Bodies

Government agencies, like the Australian eSafety Commission, play a crucial role in setting the regulatory framework for AI technologies. Their responsibilities include:

- Developing and enforcing appropriate regulations

- Conducting ongoing monitoring and assessment of AI risks

- Facilitating cooperation between industry, academia, and civil society

- Promoting public awareness and digital literacy

By striking the right balance between innovation and safety, regulators can help create an environment where AI technologies can flourish without compromising public safety and security.

The Impact on the Agricultural Sector

While the primary focus of the recent revelations has been on terrorism content and child exploitation material, the implications of AI-generated content extend to various sectors, including agriculture. As AI technologies become increasingly integrated into farming practices, it’s crucial to consider both the potential benefits and risks.

AI in Agriculture: Opportunities and Challenges

AI has the potential to revolutionize agriculture through applications such as:

- Precision farming and crop monitoring

- Predictive analytics for yield optimization

- Automated pest and disease detection

- Smart irrigation systems

However, the misuse of AI in agriculture could lead to challenges such as:

- Spread of misinformation about farming practices

- Manipulation of crop yield data

- Potential cybersecurity risks in smart farming systems

As we navigate these challenges, it’s essential to implement robust safeguards and ethical guidelines specific to the agricultural sector.
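One safeguard against the manipulation of crop yield data is to authenticate readings at the source, so that any later change can be detected. The Python sketch below uses the standard library's HMAC-SHA256. The key, field names and functions are hypothetical, and the sketch does not describe any particular vendor's system.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret provisioned to a field sensor; in practice this
# would come from a secrets manager, never from source code.
DEVICE_KEY = b"example-device-key"


def sign_reading(reading: dict, key: bytes = DEVICE_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    payload = json.dumps(reading, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_reading(reading: dict, tag: str, key: bytes = DEVICE_KEY) -> bool:
    """Compare tags in constant time; any change to the reading invalidates the tag."""
    return hmac.compare_digest(sign_reading(reading, key), tag)


reading = {"field_id": "plot-7", "yield_t_per_ha": 4.2}
tag = sign_reading(reading)
print(verify_reading(reading, tag))                             # True
print(verify_reading(dict(reading, yield_t_per_ha=6.0), tag))   # False
```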

Farmonaut: Pioneering Responsible AI in Agriculture

In the context of responsible AI use in agriculture, companies like Farmonaut are leading the way. Farmonaut offers advanced, satellite-based farm management solutions that leverage AI and machine learning technologies to improve agricultural productivity and sustainability.

Key features of Farmonaut’s approach include:

- Real-time crop health monitoring using satellite imagery

- AI-driven personalized farm advisory systems

- Blockchain-based traceability solutions for supply chain transparency

- Carbon footprint tracking for sustainable farming practices

By prioritizing data security, transparency, and ethical AI use, Farmonaut demonstrates how AI technologies can be leveraged responsibly in the agricultural sector.


For developers interested in integrating Farmonaut’s technology into their own solutions, check out the Farmonaut API and API Developer Docs.

The Way Forward: Balancing Innovation and Safety

As we continue to grapple with the challenges posed by AI-generated content, it’s clear that a multi-faceted approach is needed. This approach should balance the need for innovation with the imperative of public safety and security.

Key Strategies for the Future

- Enhanced Collaboration: Fostering closer cooperation between tech companies, regulators, and researchers to develop more effective safeguards.

- Continuous Learning and Adaptation: Ensuring that AI content moderation and deepfake detection systems evolve alongside the technologies they aim to regulate.

- Transparency and Accountability: Implementing clear reporting mechanisms and holding tech companies accountable for the content generated using their platforms.

- Public Education: Increasing digital literacy and raising awareness of the potential risks and of how to identify AI-generated content.

- Ethical AI Development: Prioritizing ethical considerations and safety measures throughout the AI development process.

The Role of Emerging Technologies

As we look to the future, emerging technologies may offer new solutions to the challenges posed by AI-generated content. Some promising areas of development include:

- Advanced machine learning algorithms for content authentication

- Blockchain-based content verification systems

- Quantum computing applications in cybersecurity

- AI-powered natural language processing for context-aware content moderation

By leveraging these technologies and continuing to innovate, we can work towards creating a safer and more trustworthy digital environment.

AI-Generated Content Complaints and Regulatory Responses

| Category | Estimated Number of Complaints | Regulatory or Industry Response | Potential Impact |
|---|---|---|---|
| Deepfake Terrorism Content | 258 | Mandatory reporting to eSafety Commission | Increased scrutiny on AI safeguards, potential fines for non-compliance |
| AI-Generated Illegal Content | 100+ | Implementation of strict online safety regulations | Enhanced content moderation requirements, legal consequences for violations |
| Child Exploitation Material | 86 | Hash-matching used by Google to detect and remove Gemini-generated material | Improved detection and removal of harmful content, stricter penalties for offenders |
| Overall AI Safety Concerns | 500+ | Development of comprehensive AI regulation framework | Potential restrictions on AI development and deployment, increased industry accountability |

Conclusion: Navigating the AI-Powered Future

The recent revelations from the Australian eSafety Commission serve as a stark reminder of the challenges we face in the age of AI. As we’ve explored throughout this article, the misuse of AI technologies for generating terrorism content and other harmful material poses significant risks to public safety and security.

However, it’s crucial to remember that AI also holds immense potential for positive change, as demonstrated by companies like Farmonaut in the agricultural sector. By implementing responsible AI practices, prioritizing safety and ethical considerations, and fostering collaboration between various stakeholders, we can work towards harnessing the benefits of AI while mitigating its risks.

As we move forward, it’s clear that the path to a safe and responsible AI-powered future will require ongoing vigilance, adaptation, and innovation. By staying informed, supporting responsible AI development, and actively participating in discussions about AI regulation and ethics, we can all play a part in shaping a digital landscape that is both innovative and secure.


FAQ Section

- What are the main concerns regarding AI-generated terrorism content?

The primary concerns include the potential for rapid spread of misinformation, radicalization of individuals, and the challenges in detecting and removing such content efficiently.

- How are tech companies responding to these challenges?

Tech companies are implementing various measures such as AI-powered content moderation, hash-matching systems, and collaborating with regulatory bodies to enhance their safeguards against misuse of their platforms.

- What role do regulatory bodies play in addressing AI-generated content issues?

Regulatory bodies like the Australian eSafety Commission are implementing stricter reporting requirements, enforcing online safety regulations, and working to develop comprehensive frameworks for AI governance.

- How can individuals protect themselves from AI-generated misinformation?

Individuals can enhance their digital literacy, verify information from multiple reliable sources, and stay informed about the latest developments in AI and deepfake detection technologies.

- What positive applications of AI are being developed in sectors like agriculture?

In agriculture, AI is being used for precision farming, crop monitoring, predictive analytics, and sustainable resource management, as demonstrated by companies like Farmonaut.