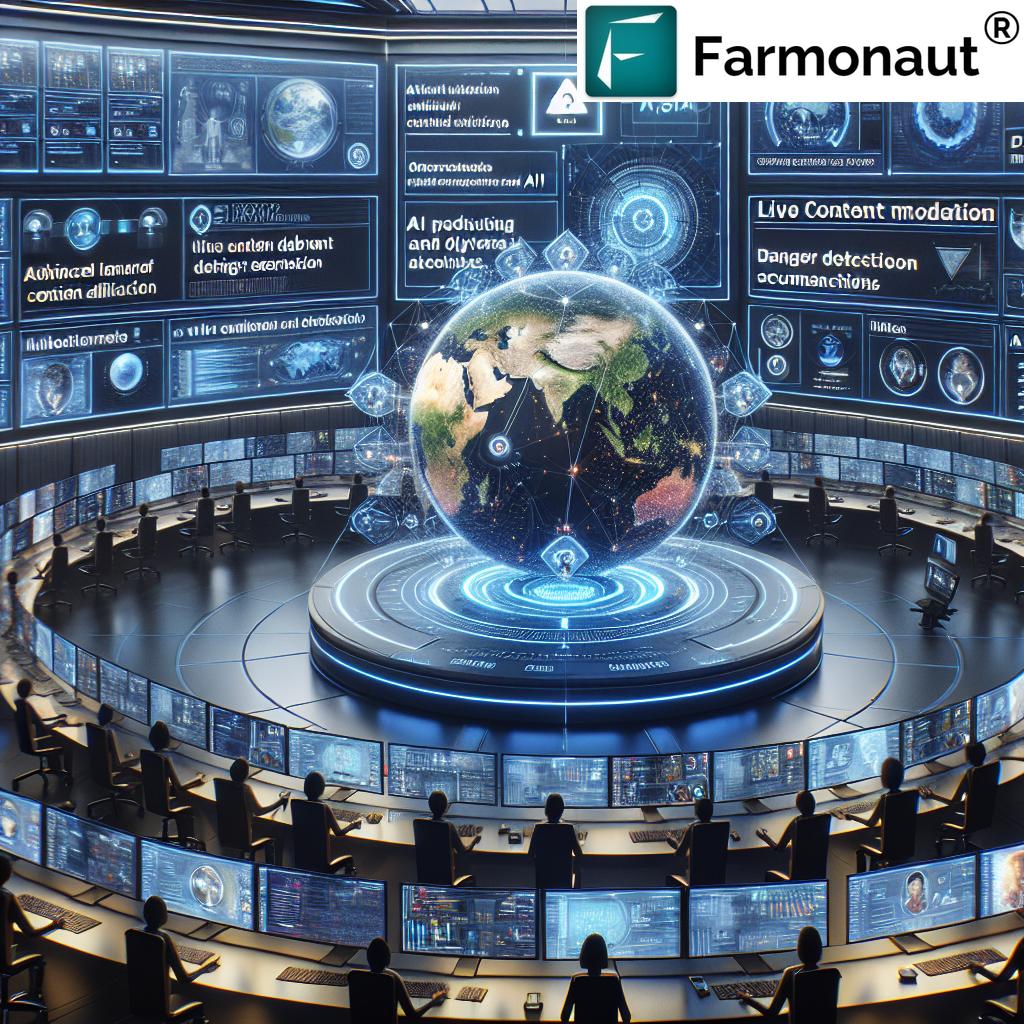

AI Safety Alert: Sydney Authorities Unveil Global Risks of Deepfake Terrorism and Content Moderation Challenges

“A world-first report reveals alarming insights into AI-generated deepfakes, with tech giants receiving user reports of terrorism and exploitation content.”

In the ever-evolving landscape of artificial intelligence (AI) technology, we find ourselves at a critical juncture where innovation and risk intersect. As we delve into the world of AI-generated content, a groundbreaking report from Sydney, Australia, has shed light on the alarming potential for misuse of this powerful technology. This blog post explores the latest developments in AI safety measures, discusses the challenges of content distribution in the digital age, and examines how companies are responding to these emerging threats.

The Unveiling of a Global Concern

The Australian eSafety Commission has recently released a world-first report that provides startling insights into the misuse of AI technology for creating deepfakes and illegal content. This revelation comes as tech giants grapple with an increasing number of user reports detailing the exploitation of AI software to generate terrorism material and child exploitation content.

At the heart of this report is Google’s disclosure to Australian authorities that it received more than 250 complaints globally over a period of nearly a year. These complaints specifically alleged that Google’s AI software was being used to create deepfake terrorism material. Additionally, the tech giant reported dozens of user warnings about its AI program, Gemini, being utilized to produce child abuse material.

The Legal Landscape and Reporting Requirements

Under Australian law, technology companies are required to provide the eSafety Commission with periodic reports on their harm minimization efforts. Failure to comply with these reporting requirements can result in substantial fines. The reporting period covered in this instance spanned from April 2023 to February 2024, offering a comprehensive view of the challenges faced by AI developers and content moderators.

This legal framework underscores the growing recognition of the need for robust online safeguards and effective AI content moderation strategies. As policymakers and industry leaders race to develop comprehensive regulations, the importance of implementing and testing these safeguards becomes increasingly apparent.

The Global Impact of AI Misuse

The implications of this report extend far beyond Australia’s borders. Since the launch of OpenAI’s ChatGPT in late 2022, regulators worldwide have called for enhanced guardrails to prevent AI from being exploited for nefarious purposes such as terrorism, fraud, deepfake pornography, and other forms of abuse.

eSafety Commissioner Julie Inman Grant emphasized the critical nature of these findings, stating, “This underscores how critical it is for companies developing AI products to build in and test the efficacy of safeguards to prevent this type of material from being generated.”

Google’s Response and Content Moderation Efforts

In response to these alarming reports, Google has reiterated its commitment to preventing the generation and distribution of content related to violent extremism, terror, child exploitation, abuse, or other illegal activities. A Google spokesperson stated, “We are committed to expanding on our efforts to help keep Australians safe online.”

It’s important to note that the number of Gemini user reports provided to the eSafety Commission represents the total global volume of user reports, not confirmed policy violations. A user report is an allegation, so the figures show how many complaints Google received rather than how much prohibited material Gemini actually produced.

Technological Approaches to Content Moderation

Google has implemented various technological solutions to combat the creation and distribution of harmful content. For instance, the company uses hash-matching, a system that automatically compares newly uploaded images against known images, to identify and remove child abuse material created with Gemini.

However, the report reveals that Google does not currently employ the same system to weed out terrorist or violent extremist material generated with Gemini. This discrepancy highlights the ongoing challenges in developing comprehensive content moderation strategies across different types of harmful content.
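To make the hash-matching idea above concrete, here is a minimal sketch in Python. It is not Google's system: the report does not say which algorithm is used, and this example assumes a plain SHA-256 digest, which only catches byte-for-byte copies. Production systems generally rely on perceptual hashes so that resized or re-encoded copies still match. The names `HashMatcher` and `sha256_digest` and the sample bytes are hypothetical.

```python
import hashlib


def sha256_digest(data: bytes) -> str:
    """Return the hex SHA-256 digest of raw file bytes."""
    return hashlib.sha256(data).hexdigest()


class HashMatcher:
    """Checks uploads against digests of previously identified files.

    Assumption: exact cryptographic hashing, used here only for illustration.
    """

    def __init__(self, known_digests: set[str]) -> None:
        self._known = set(known_digests)

    def is_known(self, upload: bytes) -> bool:
        # A match means the upload is byte-identical to a known file.
        return sha256_digest(upload) in self._known


# Hypothetical stand-in for the bytes of a previously identified image.
flagged_file = b"bytes of a previously identified image"
matcher = HashMatcher({sha256_digest(flagged_file)})

print(matcher.is_known(flagged_file))            # exact copy of a known file
print(matcher.is_known(flagged_file + b"\x00"))  # altered by a single byte
```

The second check shows the main limitation of this approach: it recognizes only material that has already been identified and catalogued, which is one reason newly generated content is harder to catch than re-uploads.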

The Broader Context of AI Regulation

As we navigate the complex landscape of AI technology regulation, it’s crucial to consider the broader implications for online safety and responsible AI development. The challenges faced by tech giants like Google underscore the need for a coordinated global effort to address the potential misuse of AI.

“As AI technology advances, policymakers and industry leaders are racing to develop comprehensive regulations to prevent misuse in content creation.”

This race to regulate AI is not just about preventing harm; it’s also about fostering an environment where AI can continue to innovate and benefit society while minimizing risks. As we’ll explore in the following sections, this delicate balance requires collaboration between tech companies, policymakers, and regulatory bodies.

Global AI Safety Measures and Challenges

To better understand the current landscape of AI safety measures worldwide, let’s examine how different regions are approaching this challenge:

| Region/Country | Key AI Safety Regulations | Content Moderation Strategies | Reported AI Misuse Incidents (estimated) | Deepfake Detection Methods | Challenges in Implementation |
|---|---|---|---|---|---|
| United States | AI Bill of Rights (proposed) | Platform-specific policies, AI-assisted moderation | 500+ | Machine learning algorithms, digital watermarking | Balancing free speech with content control |
| European Union | AI Act (in progress) | GDPR compliance, human oversight | 300+ | Blockchain-based authentication, forensic analysis | Harmonizing regulations across member states |
| China | AI Governance Principles | Centralized content review, real-name verification | 200+ | Facial recognition, audio analysis | Balancing innovation with state control |
| Australia | eSafety Commissioner reporting requirements | Mandatory reporting, hash-matching systems | 250+ | AI-powered content analysis, user reporting | Enforcing compliance across international platforms |

This table illustrates the diverse approaches to AI safety across different regions, highlighting the complex nature of regulating AI on a global scale. Each region faces unique challenges in implementing effective measures while balancing innovation, privacy concerns, and cultural differences.

The Role of AI in Agriculture: A Positive Application

While the focus of this blog is on the challenges and risks associated with AI, it’s important to recognize that AI technology also has numerous positive applications across various industries. One such area is agriculture, where AI is revolutionizing farming practices and improving efficiency.

Companies like Farmonaut are at the forefront of this agricultural revolution, leveraging AI and satellite technology to provide farmers with valuable insights and tools for precision agriculture. By using AI for crop health monitoring, personalized farm advice, and resource management, Farmonaut demonstrates how AI can be harnessed for positive impact when proper safeguards and ethical considerations are in place.

The Challenges of Content Distribution in the Digital Age

As we grapple with the implications of AI-generated content, it’s crucial to examine the broader challenges of content distribution in our increasingly digital world. The ease with which information can be shared and amplified online presents both opportunities and risks.

- Viral Spread of Misinformation: AI-generated deepfakes and manipulated content can spread rapidly across social media platforms, making it difficult to contain false information once it’s released.

- Algorithmic Amplification: Content recommendation algorithms can inadvertently promote harmful or misleading content, exacerbating the problem of AI misuse.

- Cross-Platform Challenges: Coordinating content moderation efforts across different platforms and jurisdictions remains a significant hurdle in combating the spread of illegal or harmful AI-generated material.

To address these challenges, a multi-faceted approach is necessary, combining technological solutions, policy interventions, and user education.

Developing Comprehensive AI Regulations

As the potential risks of AI become more apparent, policymakers around the world are working to develop comprehensive regulations to govern the development and use of AI technology. Key areas of focus include:

- Transparency and Explainability: Requiring AI developers to provide clear explanations of how their systems work and make decisions.

- Accountability Measures: Establishing mechanisms to hold companies and individuals responsible for the misuse of AI technology.

- Ethical Guidelines: Developing frameworks to ensure AI is developed and deployed in ways that align with societal values and human rights.

- International Cooperation: Fostering collaboration between nations to create globally consistent standards for AI safety and regulation.

These regulatory efforts aim to strike a balance between fostering innovation and protecting society from potential harm. However, the rapid pace of AI development presents ongoing challenges for policymakers trying to keep up with technological advancements.

The Future of AI Content Moderation

As we look to the future, it’s clear that AI content moderation will play an increasingly important role in maintaining online safety. Some emerging trends and technologies in this space include:

- AI-Powered Detection Systems: Advanced machine learning algorithms that can identify and flag potentially harmful content with greater accuracy.

- Blockchain-Based Content Verification: Using blockchain technology to create immutable records of content origin and modifications.

- Collaborative Filtering: Leveraging user communities and expert networks to improve content moderation accuracy and responsiveness.

- Real-Time Intervention: Developing systems that can detect and intervene in the creation of harmful content before it’s distributed.

While these advancements hold promise, it’s important to remember that technology alone cannot solve all the challenges associated with AI content moderation. Human oversight, ethical considerations, and ongoing policy refinement will remain crucial components of any effective content moderation strategy.
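As an illustration of the content-verification idea listed in this section, the core of an "immutable record" is a chain of hashes in which each entry stores the hash of the entry before it. The sketch below is a simplified, single-machine version under that assumption. It is not a real blockchain, and the `Record`, `append`, and `verify` names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    content_hash: str  # SHA-256 of the content at this step
    action: str        # e.g. "created", "cropped"
    prev_hash: str     # hash of the previous record ("" for the first one)

    def record_hash(self) -> str:
        payload = json.dumps(
            [self.content_hash, self.action, self.prev_hash]
        ).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append(chain: list[Record], content: bytes, action: str) -> None:
    """Add a record that links back to the current end of the chain."""
    prev = chain[-1].record_hash() if chain else ""
    chain.append(Record(hashlib.sha256(content).hexdigest(), action, prev))


def verify(chain: list[Record]) -> bool:
    """True if every record points at the hash of the one before it."""
    prev = ""
    for record in chain:
        if record.prev_hash != prev:
            return False
        prev = record.record_hash()
    return True


chain: list[Record] = []
append(chain, b"original image bytes", "created")
append(chain, b"cropped image bytes", "cropped")
print(verify(chain))  # history is intact

# Rewriting an earlier entry breaks the link stored in the next record.
chain[0] = Record(chain[0].content_hash, "generated", chain[0].prev_hash)
print(verify(chain))
```

This sketch only detects edits to entries that have a successor. A real ledger would also need the latest hash to be published or replicated somewhere the editor cannot change, which is the part a distributed blockchain is meant to provide.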

The Role of Tech Companies in Ensuring AI Safety

As the primary developers and deployers of AI technology, tech companies bear a significant responsibility in ensuring the safe and ethical use of their products. Some key areas where companies are focusing their efforts include:

- Robust Testing and Validation: Implementing rigorous testing procedures to identify potential vulnerabilities or misuse cases before releasing AI products to the public.

- Ethical AI Development: Incorporating ethical considerations into the AI development process from the outset, rather than as an afterthought.

- Transparency and User Education: Providing clear information to users about the capabilities and limitations of AI systems, as well as potential risks associated with their use.

- Collaboration with Regulators: Working closely with government bodies and regulatory agencies to develop and implement effective safety measures.

By taking a proactive approach to AI safety, tech companies can help mitigate risks while continuing to drive innovation in the field.

The Importance of Digital Literacy in the Age of AI

As AI-generated content becomes increasingly sophisticated and prevalent, the importance of digital literacy cannot be overstated. Educating users about the potential risks and how to identify AI-generated content is crucial for maintaining a safe online environment. Key aspects of digital literacy in the AI age include:

- Critical Thinking: Encouraging users to question the authenticity and source of online content.

- Understanding AI Capabilities: Providing education on what AI can and cannot do, helping users recognize potential red flags.

- Fact-Checking Skills: Teaching effective methods for verifying information from multiple sources.

- Ethical Considerations: Raising awareness about the ethical implications of creating and sharing AI-generated content.

By empowering users with these skills, we can create a more resilient online community that is better equipped to navigate the challenges posed by AI-generated content.

The Global Impact of AI Safety Measures

As we’ve seen from the report out of Sydney, the challenges associated with AI safety and content moderation are not confined to any single country or region. The global nature of the internet and AI technology necessitates a coordinated international response. Some key considerations for global AI safety measures include:

- Cross-Border Collaboration: Encouraging information sharing and joint efforts between countries to combat AI misuse.

- Harmonizing Regulations: Working towards a set of internationally recognized standards for AI safety and ethical development.

- Addressing Cultural Differences: Recognizing and accommodating varying cultural norms and values in the development of global AI safety measures.

- Supporting Developing Nations: Ensuring that countries with less advanced technological infrastructure are not left vulnerable to AI-related threats.

By taking a global perspective on AI safety, we can work towards creating a safer digital environment for all users, regardless of their location.

Balancing Innovation and Safety in AI Development

As we strive to address the risks associated with AI technology, it’s crucial to maintain a balance that allows for continued innovation. Overly restrictive regulations could stifle progress and prevent the development of beneficial AI applications. Some strategies for achieving this balance include:

- Sandbox Environments: Creating controlled testing environments where new AI technologies can be developed and evaluated without posing risks to the broader public.

- Graduated Regulatory Approaches: Implementing tiered regulatory frameworks that adjust based on the potential risk and impact of different AI applications.

- Public-Private Partnerships: Fostering collaboration between government agencies, academic institutions, and private companies to drive responsible AI innovation.

- Incentivizing Ethical AI: Developing reward systems and recognition programs for companies that prioritize safety and ethical considerations in their AI development processes.

By adopting these approaches, we can create an environment that encourages innovation while maintaining robust safeguards against potential misuse.

The Role of AI in Enhancing Online Safety

While much of our discussion has focused on the risks posed by AI, it’s important to recognize that AI technology also plays a crucial role in enhancing online safety. Some positive applications of AI in this area include:

- Advanced Threat Detection: AI-powered systems can identify and respond to cybersecurity threats more quickly and accurately than traditional methods.

- Improved Content Filtering: Machine learning algorithms can help filter out spam, phishing attempts, and other malicious content from user inboxes and social media feeds.

- Personalized Safety Recommendations: AI can analyze user behavior and provide tailored safety recommendations, helping individuals better protect themselves online.

- Automated Vulnerability Assessments: AI systems can continuously scan for and identify potential vulnerabilities in software and networks, allowing for quicker patching and mitigation.

These applications demonstrate how AI, when developed and deployed responsibly, can be a powerful tool for creating a safer online environment.

Conclusion: Navigating the Future of AI Safety

As we’ve explored throughout this blog post, the challenges posed by AI-generated deepfakes and illegal content are significant and require a coordinated global response. The world-first report from Australia’s eSafety Commission has shed light on the urgent need for robust online safeguards and effective content moderation strategies.

Moving forward, it’s clear that addressing these challenges will require ongoing collaboration between tech companies, policymakers, and users. By developing comprehensive regulations, implementing advanced content moderation technologies, and fostering digital literacy, we can work towards a future where the benefits of AI technology are realized while minimizing its potential for harm.

As we continue to navigate this complex landscape, it’s crucial to remain vigilant, adaptable, and committed to the responsible development and use of AI technology. Only through these concerted efforts can we ensure that AI remains a force for good in our increasingly digital world.

FAQ Section

Q: What are deepfakes, and why are they concerning?

A: Deepfakes are highly realistic synthetic media created using AI technology. They’re concerning because they can be used to create convincing fake videos or images of real people, potentially leading to misinformation, fraud, or reputational damage.

Q: How can I protect myself from AI-generated scams or misinformation?

A: Stay informed about the latest AI technologies, be critical of content you encounter online, verify information from multiple reliable sources, and use reputable fact-checking tools when in doubt.

Q: What are tech companies doing to combat AI misuse?

A: Tech companies are implementing various measures, including AI-powered content moderation, developing deepfake detection tools, collaborating with regulators, and educating users about potential risks.

Q: How can AI be used positively in content moderation?

A: AI can help identify and filter out harmful content more quickly and accurately than human moderators alone. It can also assist in detecting subtle patterns that might indicate coordinated misinformation campaigns.

Q: What role do users play in ensuring AI safety?

A: Users play a crucial role by reporting suspicious content, staying informed about AI capabilities and limitations, practicing good digital hygiene, and supporting responsible AI development through their choices and advocacy.
